How Can You Centralize Bioprocessing Data Effortlessly?

Managing large amounts of bioprocessing data is a huge problem. Experimental campaigns can last months, leaving scientists drowning in data that can take weeks to structure before it can be analysed.

Time is critical, as the race to produce a vaccine during the COVID pandemic showed. Every bioprocessing lab, regardless of size, therefore needs efficient ways to handle the constant flux of information and turn it into insight. But why is there so much data in bioprocessing? Let's take a step back and first understand what bioprocessing is all about.

What is Bioprocessing?

Bioprocessing is a loosely defined term for processes that use whole cells or organelles to produce a desired product. Through metrology and control of process parameters, the objective of a bioprocess experiment is to achieve higher product yields as efficiently as possible. Products vary, but the most common are biotherapeutics, vaccines, specialty chemicals, biochemicals, and enzymes.

Advances in bioprocessing, such as cell line engineering, upstream production, and downstream processing, have driven up demand for specific biopharmaceuticals, most notably monoclonal antibodies (mAbs), whose market is expected to approach $140 billion by 2024. The rising demand reflects how biologics are expanding into new therapeutic areas, such as neurodegenerative and cardiovascular diseases. These areas bring a step-change in complexity and require an excellent data handling strategy to deliver rapid and trustworthy insights.

Alongside this, advances in molecular biology have given rise to emerging modalities such as cell and gene therapies, nucleic acid-based medicines, and new workflows for engineered vaccines.

In general, bioprocessing can be divided into five stages:

- Cell line development (CLD)

- Upstream Processing (USP)

- Downstream Processing (DSP)

- Formulation - fill and finish

- Quality control

All stages are interdependent, so data acquired at any point will affect the later stages of the process.

Here, we focus on the USP stage of bioprocessing. USP is about understanding the most desirable conditions for production and then reproducing those conditions during scale-up or scale-out. It can also include purifying target compounds with miniaturized purification.

To optimize your upstream bioprocessing strategy, a fundamental knowledge of the process and its characteristics is essential. To build that knowledge, you first need to see the data and interpret it in a way that lets you make informed decisions.

Bioreactors generate a wealth of data from multiple sensors. For example, a typical Sartorius Ambr® 250 bioreactor system produces tens of thousands of data points per bioreactor. A typical mammalian process lasts 14 days or longer, so the data pool keeps growing the longer the process runs.
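To get a feel for how quickly these numbers add up, here is a back-of-envelope estimate. The inputs are assumptions chosen for illustration, not Ambr® 250 specifications: 20 logged parameters, one reading every 5 minutes, a 14-day run, and a hypothetical 24-vessel campaign.

```python
def estimate_datapoints(n_parameters: int, interval_min: float,
                        duration_days: float, n_bioreactors: int = 1) -> int:
    """Rough count of logged values: parameters x readings per parameter x vessels.

    All inputs are hypothetical; real logging rates depend on the system's configuration.
    """
    readings_per_parameter = int(duration_days * 24 * 60 / interval_min)
    return n_parameters * readings_per_parameter * n_bioreactors


print(estimate_datapoints(20, 5, 14))                    # 80640 per bioreactor
print(estimate_datapoints(20, 5, 14, n_bioreactors=24))  # 1935360 across 24 vessels
```

Even under these modest assumptions a single run passes a million values before any at-line or offline measurements are added.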

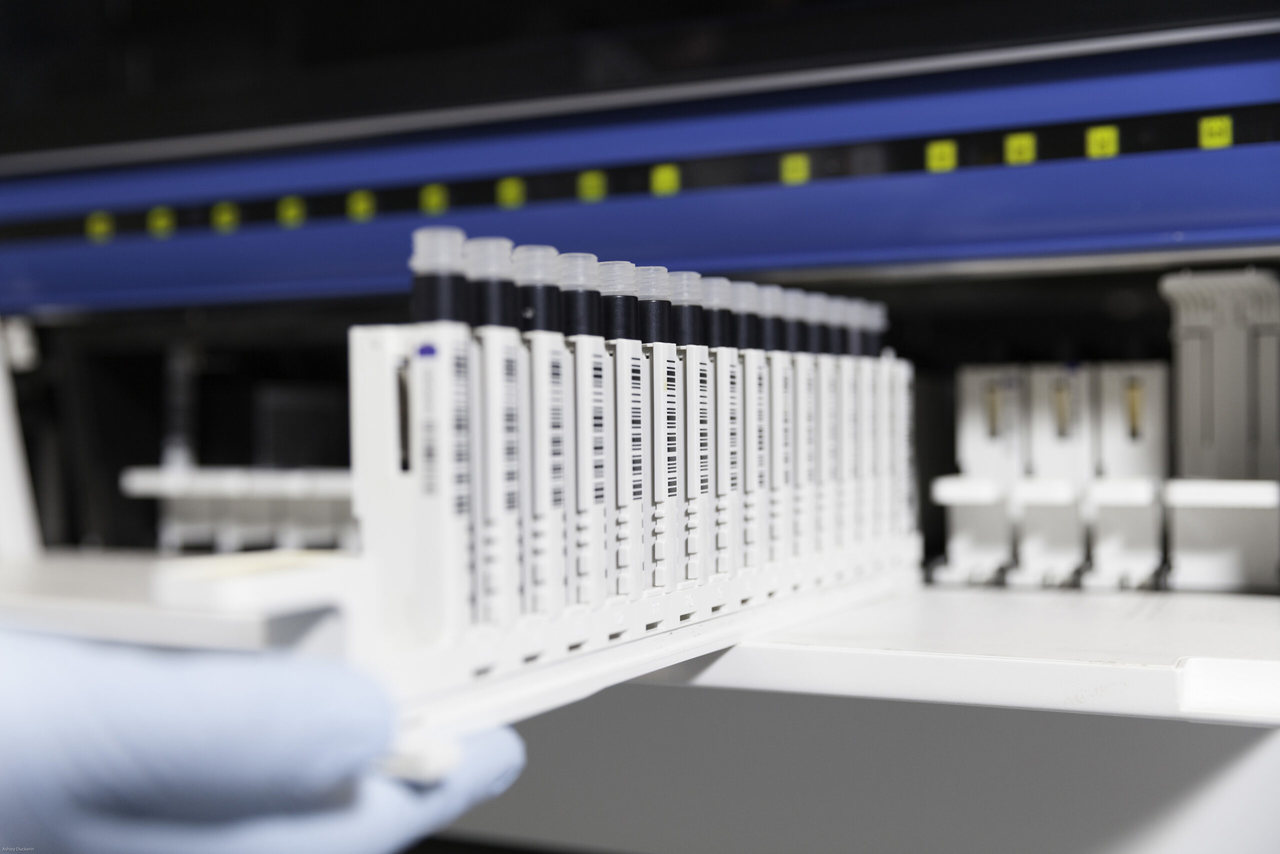

Moreover, each bioreactor is coupled with inline, atline, and offline sensors, each adding to the volume of data generated. Inline sensors, such as pH probes and temperature sensors, are part of the bioreactor itself. Atline sensors are integrated devices that analyze samples drawn automatically from your bioreactors to measure metabolites and cell counts.

An example is the Nova Biomedical BioProfile® FLEX2, which can be integrated into the Sartorius Ambr® 250 bioreactor system. Offline sensors, such as the Cedex Bio HT Analyzer, are stand-alone units to which bioreactor samples are supplied manually, and the resulting data requires manual intervention to link it back to the rest of the bioprocessing data.

Metabolite analysis is critical to process optimization, as are measurements of yield, waste, cell count, and feed (usually glucose). Together these indicate the health of the cells and how they are responding to the particular setpoints in the bioprocess design.
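As one illustration of turning raw cell counts into a health indicator, the specific growth rate μ = ln(X₂/X₁) / (t₂ − t₁) and the doubling time ln 2 / μ can be computed from two viable cell density readings. This is a minimal sketch that assumes exponential growth between the two samples; the example densities are made up.

```python
import math


def specific_growth_rate(vcd_start: float, vcd_end: float, hours: float) -> float:
    """Specific growth rate mu (1/h), assuming exponential growth between two counts."""
    if vcd_start <= 0 or vcd_end <= 0 or hours <= 0:
        raise ValueError("cell densities and elapsed time must be positive")
    return math.log(vcd_end / vcd_start) / hours


def doubling_time(mu: float) -> float:
    """Population doubling time (h) for a given specific growth rate."""
    return math.log(2) / mu


# Hypothetical readings: 0.5e6 -> 2.0e6 viable cells/mL over 48 h (two doublings).
mu = specific_growth_rate(0.5e6, 2.0e6, 48)
print(round(doubling_time(mu), 6))  # 24.0
```

A falling μ or a lengthening doubling time between samples is one simple signal that the cells are reacting poorly to the current setpoints.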

These insights can even help predict the outcome of a process and provide feedback to the bioreactors' proportional-integral-derivative (PID) control loops. Such control loops can, for example, initiate feeding if the glucose concentration in a bioreactor falls below a certain threshold.
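The sketch below shows what a discrete PID loop driving a glucose feed pump might look like. It is illustrative only: the gains, the 4.0 g/L setpoint, and the normalised 0 to 1 pump output are all assumptions, and it omits refinements such as integral anti-windup that real bioreactor controllers typically include. Because the output is clamped at zero, the pump only starts feeding once glucose drops below the setpoint.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PIDController:
    """Minimal discrete PID controller (illustrative gains, no anti-windup)."""
    kp: float
    ki: float
    kd: float
    setpoint: float          # hypothetical target glucose, g/L
    out_min: float = 0.0     # feed pump off
    out_max: float = 1.0     # feed pump at full rate (normalised)
    _integral: float = 0.0
    _prev_error: Optional[float] = None

    def update(self, measurement: float, dt: float) -> float:
        """Return a normalised feed rate from the latest glucose reading."""
        # Positive error means glucose is below the setpoint, i.e. the cells need feed.
        error = self.setpoint - measurement
        self._integral += error * dt
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        output = self.kp * error + self.ki * self._integral + self.kd * derivative
        # Clamp: the pump cannot run backwards or exceed its maximum rate.
        return max(self.out_min, min(self.out_max, output))


controller = PIDController(kp=0.5, ki=0.0, kd=0.0, setpoint=4.0)
print(controller.update(5.0, dt=1.0))  # glucose above setpoint -> 0.0 (no feed)
print(controller.update(3.0, dt=1.0))  # glucose below setpoint -> 0.5
```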

Why have a Unified Bioprocessing Dataset? Why does it matter?

The offline analyzer described above is only one of many devices used in bioprocessing. One can quickly envisage a large repertoire of other instruments for analyzing bioprocess samples, including assays that screen for product titre and contaminant levels, such as label-free detection systems, enzyme-linked immunosorbent assays (ELISA), and quantitative polymerase chain reaction (qPCR).

During a bioprocess experiment, data is spread across disparate devices. The challenge for scientists is then to unify and structure these datasets so they can analyze and visualize the experiment. A common obstacle is that exports from different devices come in different formats, and it falls to the scientist to reformat them so that they align with the sample time points of the bioprocess.
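To make the reformatting problem concrete, here is a minimal sketch that normalises two exports into one common record type. Both formats are hypothetical: "device A" writes comma-separated files with ISO 8601 timestamps and glucose in g/L, while "device B" writes semicolon-separated files with day-first dates, lowercase sample IDs, and glucose in mmol/L (converted using glucose's molar mass of about 180.16 g/mol). The column names and the `Measurement` schema are illustrative, not taken from any real instrument.

```python
import csv
import io
from dataclasses import dataclass
from datetime import datetime

GLUCOSE_G_PER_MMOL = 0.18016  # glucose molar mass ~180.16 g/mol


@dataclass(frozen=True)
class Measurement:
    sample_id: str
    analyte: str
    value_g_per_l: float
    measured_at: datetime
    source: str


def parse_device_a(text: str) -> list[Measurement]:
    """Hypothetical export: comma-separated, ISO 8601 timestamps, glucose in g/L."""
    rows = csv.DictReader(io.StringIO(text))
    return [Measurement(r["Sample ID"], "glucose", float(r["Glucose (g/L)"]),
                        datetime.fromisoformat(r["Timestamp"]), "device_a")
            for r in rows]


def parse_device_b(text: str) -> list[Measurement]:
    """Hypothetical export: semicolon-separated, day-first dates, glucose in mmol/L."""
    rows = csv.DictReader(io.StringIO(text), delimiter=";")
    return [Measurement(r["sample"].strip().upper(), "glucose",
                        round(float(r["glc_mmol"]) * GLUCOSE_G_PER_MMOL, 3),
                        datetime.strptime(r["time"], "%d/%m/%Y %H:%M"), "device_b")
            for r in rows]


export_a = "Sample ID,Glucose (g/L),Timestamp\nBR01-D3,4.20,2021-06-03T09:15:00\n"
export_b = "sample;glc_mmol;time\nbr01-d3;23.1;03/06/2021 09:40\n"

# One list, one schema, one unit, sorted by measurement time.
unified = sorted(parse_device_a(export_a) + parse_device_b(export_b),
                 key=lambda m: m.measured_at)
for m in unified:
    print(m.sample_id, m.value_g_per_l, m.measured_at.isoformat(), m.source)
```

Each new instrument then only needs its own small parser, while everything downstream works against a single schema.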

This is where much of the complexity and time cost of bioprocess experimentation really begins. Given the sheer volume of data, research groups need to bring it together as quickly and easily as possible so that scientists can iterate on their experiments and make informed decisions sooner.

Precisely matching offline data to the exact bioprocess sample time points is critical for scientists to understand their process accurately. Offline data does not carry the time at which the sample was taken; it carries the timestamp of when the measurement was made, which makes it difficult to unify and increases the full-time-equivalent (FTE) effort required. In addition, methods for collecting and structuring data range from manual USB stick collection to Excel, emails, paper, in-house software solutions, and more, all of which reduce data integrity.
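One way to attach an offline measurement to the sample it most likely came from is to look up the latest sample taken at or before the measurement time, and reject the match if the gap is implausibly long. The sketch below uses Python's `bisect` module for that lookup. The matching rule and the 4-hour window are assumptions for illustration; in practice sample IDs, where available, are a more reliable key than timestamps alone.

```python
from bisect import bisect_right
from datetime import datetime, timedelta
from typing import Optional


def match_to_sample(measured_at: datetime, sample_times: list[datetime],
                    max_delay: timedelta) -> Optional[datetime]:
    """Return the latest sample time at or before `measured_at`, if within `max_delay`.

    `sample_times` must be sorted ascending. Assumes (hypothetically) that a sample is
    always measured after it is taken and within `max_delay` of being taken.
    """
    i = bisect_right(sample_times, measured_at)
    if i == 0:
        return None  # measured before any sample was taken
    candidate = sample_times[i - 1]
    return candidate if measured_at - candidate <= max_delay else None


samples = [datetime(2021, 6, 1, 9, 0), datetime(2021, 6, 2, 9, 0), datetime(2021, 6, 3, 9, 0)]
window = timedelta(hours=4)
print(match_to_sample(datetime(2021, 6, 2, 11, 30), samples, window))  # 2021-06-02 09:00:00
print(match_to_sample(datetime(2021, 6, 2, 15, 0), samples, window))   # None (6 h gap)
```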

Furthermore, datasets structured in this way may not respect the FAIR (Findable, Accessible, Interoperable, Reusable) principles, an issue seen across the industry that prevents data from being turned into information and, ultimately, knowledge.

Digitalization of this process is the only way to ensure data across different experiments are streamlined while also maintaining source data credibility and traceability. Only then can this knowledge be effectively exploited to improve production processes.
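Traceability can be made concrete by keeping provenance alongside every unified value. This minimal sketch, with hypothetical field names, stores the source device, source file, and a SHA-256 digest of the untouched export, so anyone can later confirm that a value still traces back to the exact original file.

```python
import hashlib
from datetime import datetime, timezone


def with_provenance(record: dict, raw_export: bytes, source_device: str,
                    source_file: str) -> dict:
    """Return a copy of `record` annotated with where its value came from."""
    return {
        **record,
        "provenance": {
            "source_device": source_device,
            "source_file": source_file,
            # Digest of the untouched export, so the origin can be re-verified later.
            "sha256": hashlib.sha256(raw_export).hexdigest(),
            "ingested_at": datetime.now(timezone.utc).isoformat(),
        },
    }


def verify_source(record: dict, raw_export: bytes) -> bool:
    """True if `raw_export` is byte-for-byte the file the record was built from."""
    return record["provenance"]["sha256"] == hashlib.sha256(raw_export).hexdigest()


raw = b"sample;glc_mmol;time\nbr01-d3;23.1;03/06/2021 09:40\n"
row = with_provenance({"sample_id": "BR01-D3", "glucose_g_per_l": 4.162},
                      raw, "offline_analyzer", "run_042.csv")
print(verify_source(row, raw))                            # True
print(verify_source(row, raw.replace(b"23.1", b"25.0")))  # False: the file was edited
```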

Bioprocessing 4.0 - Digitalization of Process Development

The biopharmaceutical industry has lagged behind other industries, such as finance, which has used end-to-end digitalization since 2000. Bioprocessing involves complex living cells in which variability is high, so measuring and predicting bioprocess performance is challenging.

Another reason is that cell culture and biologics purification were still in their infancy in 2000. However, the biopharmaceutical industry has begun to evolve: it is leaving behind paper-based bioprocessing, siloed data, and manual processes, and adopting cloud-based platforms.

This long-term vision of Bioprocessing 4.0 is defined as a fully end-to-end connected bioprocess, meaning all systems and equipment are digitally connected. Bioprocessing pipelines will be fully automated and monitored and controlled remotely. Once data from all devices is ingested into the cloud, the way will be paved for artificial intelligence and machine learning.

Processes will start to have digital twins that can be used to run simulations and predictions of your bioprocess, powerful tools that will save companies money and time. With all data residing in the cloud, analytics can be applied easily to improve processes, regardless of manufacturing location.

Creating a successful therapeutic agent is the end goal in the biopharmaceutical industry. Although the market is growing steadily, the number of biologics approved by the United States Food and Drug Administration (FDA) is still very low: 21 in 2018, 22 in 2019, only 8 in 2020, and so far 11 in 2021.

Digitalization and automation of bioprocessing have been significant contributors to ensuring Quality by Design (QbD) at different stages of the drug development pipeline. Biopharmaceutical developers that incorporate QbD approaches into their processes, building quality into the product, will improve their development strategies and raise the success rate of drugs in the final stages of development.

Want to explore this topic with us? Get in touch with us and have an open discussion about bioprocessing.
